Exploring how the relational features of robots impact children's engagement and learning

One challenge I've faced in my research is assessment. That's because some of the stuff I'd like to measure is hard to measure—namely, kids' relationships with robots.

During one study, the Tega robot asked kids to take a photo with it so it could remember them. We gave each kid a copy of their photo at the end of the study as a keepsake.

I study kids, learning, and how we can use social robots to help kids learn. The social robots I've worked with, Tega and its predecessor, DragonBot, are fluffy, animated characters that are more akin to Disney sidekicks than to vacuum cleaners. Both robots use Android phones to display an animated face; they squash and stretch as they move; they can play back sounds and respond to a variety of sensors.

In my work so far, I've found evidence that the social behaviors of the robot—such as its nonverbal behavior (e.g., gaze and posture), social contingency (e.g., performing the right social behaviors at the right times), and expressivity (such as using a very expressive voice versus a flat/boring one)—significantly impact how much kids learn, how engaged they are in the learning activities, and how credible they think the robot is.

I've also seen kids treat the robot as something kind of like a friend. As I've talked about before, kids treat the robot as something in between a pet, a tutor, and a technology. They show many social behaviors with robots (hugging, talking, tickling, giving presents, sharing stories, inviting them to picnics), and they also show understanding that the robot can turn off and needs battery power to turn back on. In some of our studies, we've asked kids questions about the properties of the robot: Can it think? Can it break? Does it feel tickles? Kids' answers show that they understand that the robot is a technological, human-made entity, but also that it shares properties with animate agents.

In many of our studies, we've deliberately tried to situate the robot as a peer. After all, one key way that children learn is through observing, cooperating with, and being in conflict with their peers. Putting the cute, fluffy robot in a peer-like role seemed natural. And over the past six years, I've seen kids mirror robots' behaviors and language use, learning from them the same way they learn from peers.

I began to wonder about the impact of the relational features of the robot on children's engagement and learning: that is, the stuff about the robot that influences children's relationships with the robot. These relational features include the social behaviors we have been investigating, as well as others: mirroring, entrainment, personalization, change over time in response to the interaction, references to a shared narrative, and more. Some teachers I've talked to have said that it's their relationship with their students that really matters in helping kids learn. What if the same were true with robots?

My hunch—one I'm exploring in my dissertation right now via a 12-week study at Boston-area schools—is that yes: kids' relationships with the robot do matter for learning.

But how do you measure that?

I dug into the literature. As it turns out, psychologists have observed and interviewed children, their parents, and their teachers about kids' peer relationships and friendship quality. There are also scales and questionnaires for assessing adults' relationships, personal space, empathy, and closeness to others.

I ran into two main problems. First, all of the work with kids involved assumptions about peer interactions that didn't hold with the robot. For example, several observation-based methodologies assumed that kids would be freely associating with other kids in a classroom. Frequency of contact and exclusivity were two variables they coded for (higher frequency and more exclusive contact meant the kids were more likely to be friends). Nope: Due to the setup of our experimental studies, kids only had the option of doing a fairly structured activity with the robot once a week, at specific times of the day.

The next problem was that all the work with adults assumed that the experimental subjects would be able to read. As you might imagine, five-year-olds aren't prime candidates for filling out written questionnaires full of "how do you feel about X, Y, or Z on a 1-5 scale." These kids are still working on language comprehension and self-reflection skills.

I found a lot of inspiration, though, including several gems that I thought could be adapted to work with my target age group of 4–6 year-olds. I ended up with an assortment of assessments that tap into a variety of methodologies: questions, interviews, activities, and observations.

We showed pictures of the robot to help kids choose an initial answer when asking some interview questions. These pictures were shown for the question, "Let's pretend the robot didn't have any friends. Would the robot not mind or would the robot feel sad?"

We ask kids questions about how they think robots feel, trying to understand their perceptions of the robot as a social, relational agent. For example, one question was, "Does the robot really like you, or is the robot just pretending?" Another was, "Let's pretend the robot didn't have any friends. Would the robot not mind or would the robot feel sad?" For each question, we also ask kids to explain their answer, and whether they would feel the same way themselves. Their explanations can reveal a lot about what criteria they use to decide whether the robot has social, relational qualities: whether the robot has feelings, actions the robot takes, consequences of actions, or moral rules. For example, one boy thought the robot really liked him "because I'm nice" (i.e., because of the child's attributes), while a girl said the robot liked her "because I told her a story" (i.e., because of actions the child took).

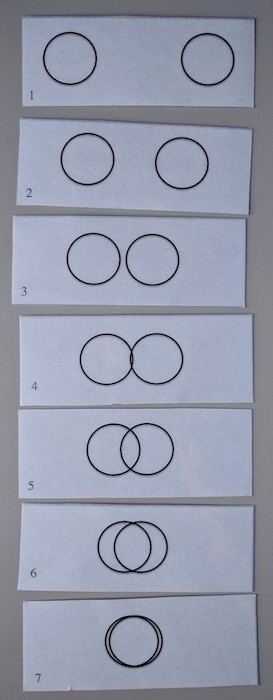

The set of circles used in our adapted Inclusion of Other in the Self task.

Some of these questions used pictorial response options, such as our adaptation of the Inclusion of Other in the Self scale. In this scale, kids are shown seven pairs of increasingly overlapping circles, and asked to point to the pair of circles that best shows their relationship with someone. We ask not only about the robot, but also about kids' parents, pets, best friends, and a bad guy in the movies. This lets us see how kids rate the robot in relation to other characters in their lives.
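For readers who want to tabulate responses like these, here is a minimal Python sketch of one way to compare the robot's rating with the other characters'. It is an illustration only, not the scoring procedure used in our studies: it assumes each pair of circles is scored from 1 (least overlap) to 7 (most overlap), and the function names and the example responses are hypothetical.

```python
# Hypothetical scoring sketch for a seven-point pictorial closeness scale.
# Assumption: circle pairs are scored 1 (least overlap) to 7 (most overlap).

MIN_SCORE, MAX_SCORE = 1, 7


def rank_targets(ratings):
    """Return the targets ordered from closest to least close.

    Ties keep the order in which the targets were entered.
    """
    for target, score in ratings.items():
        if not MIN_SCORE <= score <= MAX_SCORE:
            raise ValueError(f"{target}: score {score} is outside 1-7")
    return sorted(ratings, key=lambda target: ratings[target], reverse=True)


def robot_relative_to(ratings, other):
    """Robot rating minus another target's rating (positive = robot rated closer)."""
    return ratings["robot"] - ratings[other]


# Made-up responses for one illustrative child (not study data).
example = {
    "robot": 5,
    "parent": 7,
    "pet": 6,
    "best friend": 6,
    "movie bad guy": 1,
}

ranking = rank_targets(example)
print(ranking)
print(robot_relative_to(example, "best friend"))
```

Keeping the raw 1 to 7 scores alongside the ranking makes it possible to look both at where the robot falls among the other characters and at how far apart the ratings are.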

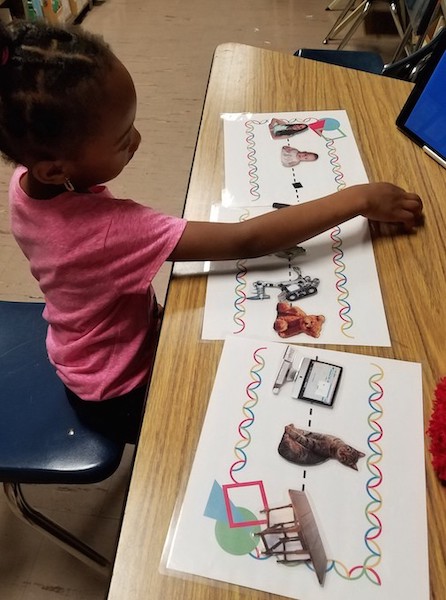

This girl is doing the Robot Sorting Task, in which she decides how much like a person each entity is and places each picture in an appropriate place along the line.

Another activity we created asks kids to sort a set of pictures of various entities along a line: a frog, a cat, a baby, a robot from a movie (like Baymax, WALL-E, or R2-D2), a mechanical robot arm, Tega, and a computer. The line is anchored on one end with a picture of a human adult, and on the other with a picture of a table. We want to see not only where kids put Tega in relation to the other entities, but also what kids say as they sort them. Their explanations of why they place each entity where they do can reveal what qualities they consider important for being like a person: The ability to move? Talk? Think? Feel?
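As a rough illustration of how placements along such a line could be turned into numbers, the sketch below maps each picture's position to a score between 0 (at the table anchor) and 1 (at the human anchor). Everything here is an assumption made for the example: the idea of measuring positions in centimeters from the table end, the 100 cm line length, the function names, and the placements themselves. It is not a description of how the task was actually coded.

```python
# Hypothetical coding sketch for a sorting task along an anchored line.
# Assumption: positions are measured in centimeters from the table anchor.


def person_likeness(position_cm, line_length_cm):
    """Map a position to 0.0 (table end) through 1.0 (human end)."""
    if line_length_cm <= 0:
        raise ValueError("line length must be positive")
    if not 0 <= position_cm <= line_length_cm:
        raise ValueError("position must lie on the line")
    return position_cm / line_length_cm


def order_by_likeness(placements, line_length_cm):
    """Return (entity, score) pairs, most person-like first."""
    scores = {
        name: person_likeness(pos, line_length_cm)
        for name, pos in placements.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Made-up placements for one illustrative child (not study data).
placements = {
    "baby": 90.0,
    "cat": 70.0,
    "Tega": 60.0,
    "frog": 55.0,
    "movie robot": 50.0,
    "robot arm": 20.0,
    "computer": 10.0,
}

ordered = order_by_likeness(placements, line_length_cm=100.0)
for name, score in ordered:
    print(f"{name}: {score:.2f}")
```

Scores like these only capture where a picture ended up; what kids say while sorting still has to be recorded and coded separately.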

In the behavioral assessments, the robot or experimenter does something, and we observe what kids do in response. For example, when kids played with the robot, we had the robot disclose personal information, such as skills it was good or bad at, or how it felt about its appearance: "Did you know, I think I'm good at telling stories because I try hard to tell nice stories. I also think my blue fluffy hair is cool." Then the robot prompted for information disclosure in return. Because people tend to disclose more information, and more personal or sensitive information, to people to whom they feel closer, we listened to see whether kids disclosed anything to the robot: "I'm good at reading," "I can ride a bike," "My teacher says I'm bad at listening."

Tega sports several stickers given to it by one child.

Another activity looked at conflict and kids' tendency to share (like they might with another child). The experimenter holds out a handful of stickers and tells the child and robot that they can each have one. The child is allowed to pick a sticker first. The robot says, "Hey! I want that sticker!" We observe to see if the child says anything or spontaneously offers up their sticker to the robot. (Don't worry: If the child does give the robot the sticker, the experimenter fishes a duplicate sticker out of her pocket for the child.)

Using this variety of assessments—rather than using only questions or only observations—can give us more insight into how kids think and feel. We can see if what kids say aligns with what kids do. We can get at the same concepts and questions from multiple angles, which may give us a more accurate picture of kids' relationships and conceptualizations.

Through the process of searching for the assessments I needed, discovering that nothing quite right existed, and creating new ways of capturing kids' behaviors, feelings, and thoughts, the importance of assessment really hit home. Measurement and assessment are among the most important things I do in research. I could ask any number of questions, hypothesize any number of outcomes, but without performing an experiment and actually measuring something relevant to my questions, I would get no answers.

We've just published a conference paper on our first pilot study validating four of these assessments. The assessments were able to capture differences in children's relationships with a social robot, as expected, as well as how their relationships change over time. If you study relationships with young kids (or simply want to learn more), check it out!

—

This article originally appeared on the MIT Media Lab website, May 2018

Acknowledgments

The research I talk about in this post was only possible with help from multiple collaborators, most notably Cynthia Breazeal, Hae Won Park, Randi Williams, and Paul Harris.

This research was supported by an MIT Media Lab Learning Innovation Fellowship and by the National Science Foundation. Any opinions, findings and conclusions, or recommendations expressed in this article are those of the authors and do not represent the views of the NSF.