Socially Assistive Robotics

This project was part of the Year 3 thrust for the Socially Assistive Robotics: An NSF Expedition in Computing grant, which I was involved in at MIT in the Personal Robots Group.

The overall mission of this expedition was to develop the computational techniques that could enable the design, implementation, and evaluation of "relational" robots, in order to encourage social, emotional, and cognitive growth in children, including those with social or cognitive deficits. The expedition aimed to increase the effectiveness of technology-based interventions in education and healthcare and to enhance the lives of children who may require specialized support in developing critical skills.

The Year 1 project targeted nutrition; Year 3 targeted language learning (that's this project!); Year 5 targeted social skills.

Second-language learning companions

This project was part of our effort at MIT to develop robotic second-language learning companions for preschool children. (We did other work in this area too: e.g., several projects looking at what design features positively impact children's learning as well as how children learn and interact over time.)

The project had two main goals. First, we wanted to test whether a socially assistive robot could help children learn new words in a foreign language (in this case, Spanish) more effectively by personalizing its affective/emotional feedback.

Second, we wanted to demonstrate that we could create and deploy a fully autonomous robotic system at a school for several months.

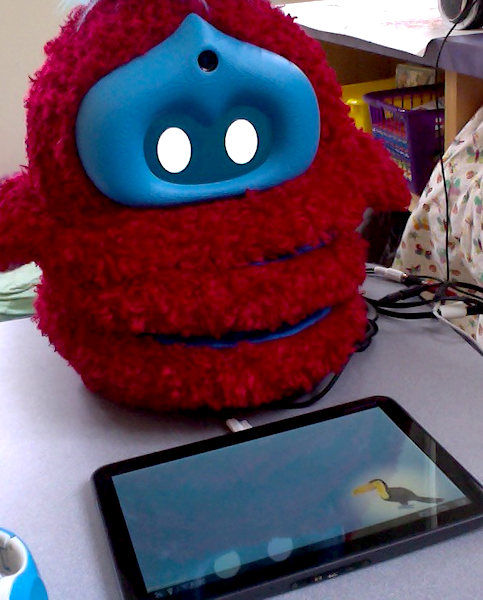

Tega Robot

We used the Tega robot. Designed and built in the Personal Robots Group, it's a squash-and-stretch robot specifically designed to be an expressive, friendly creature. An Android phone displays an animated face and runs control software. The phone's sensors can be used to capture audio and video, which we can stream to another computer so a teleoperator can figure out what the robot should do next, or, in other projects, use as input for various behavior modules, such as speech entrainment or affect recognition. We can stream live human speech, with the pitch shifted up to sound more child-like, to play on the robot, or play back recorded audio files.

Here is a video showing one of the earlier versions of Tega. Here's research scientist Dr. Hae Won Park talking about Tega and some of our projects, with a newer version of the robot.

Language learning game

We created an interactive game that kids could play with a fully autonomous robot and the robot's virtual sidekick, a toucan shown on a tablet screen. The game was designed to support second-language acquisition. The robot and the virtual agent each took on the role of a peer or learning companion and accompanied the child on a make-believe trip to Spain, where they learned new words in Spanish together.

Two aspects of the interaction were personalized to each child: (1) the content of the game (i.e., which words were presented), and (2) the robot's affective responses to the child's emotional state and performance.

This video shows the robot, game, and interaction.

Study

We conducted a 2-month study in three "special start" preschool classrooms at a public school in the Greater Boston Area. Thirty-four children ages 3-5, with 15 classified as special needs and 19 as typically developing, participated in the study.

The study took place over nine sessions: one session of initial assessments, seven sessions playing the language learning game with the robot, and a final session for posttests and saying goodbye to the robot.

We found that children learned new words presented during the interaction, that children mimicked the robot's behavior, and that the robot's affective personalization led to greater positive responses from the children. This study provided evidence that children will engage a social robot as a peer over time, and that personalizing a robot's behavior to children can lead to positive outcomes, such as greater liking of the interaction.

Links

- Video showing this project

- On IEEE Spectrum: Kids Love MIT's Latest Squishable Social Robot (Mostly)

- From NSF: Robot learning companion offers custom-tailored tutoring

- This work is discussed on the Personal Robots Group site and the MIT Media Lab site

- I wrote about this project for the MIT Media Lab blog: Making new (robot) friends: Understanding children's relationships with social robots.

- That blog post was republished on IEEE Spectrum: Robots for Kids: Designing Social Machines That Support Children's Learning.

Publications

- Kory-Westlund, J., Gordon, G., Spaulding, S., Lee, J., Plummer, L., Martinez, M., Das, M., & Breazeal, C. (2015). Learning a Second Language with a Socially Assistive Robot. In Proceedings of New Friends: The 1st International Conference on Social Robots in Therapy and Education. (*equal contribution). [PDF]

- Kory-Westlund, J. M., Lee, J., Plummer, L., Faridia, F., Gray, J., Berlin, M., Quintus-Bosz, H., Harmann, R., Hess, M., Dyer, S., dos Santos, K., Adalgeirsson, S., Gordon, G., Spaulding, S., Martinez, M., Das, M., Archie, M., Jeong, S., & Breazeal, C. (2016). Tega: A Social Robot. In S. Sabanovic, A. Paiva, Y. Nagai, & C. Bartneck (Eds.), Proceedings of the 11th ACM/IEEE International Conference on Human-Robot Interaction: Video Presentations (p. 561). Best Video Nominee. [PDF] [Video]

- Gordon, G., Spaulding, S., Kory-Westlund, J., Lee, J., Plummer, L., Martinez, M., Das, M., & Breazeal, C. (2016). Affective Personalization of a Social Robot Tutor for Children's Second Language Skills. In Proceedings of the 30th AAAI Conference on Artificial Intelligence. AAAI: Palo Alto, CA. [PDF]

- Kory-Westlund, J. M., Gordon, G., Spaulding, S., Lee, J., Plummer, L., Martinez, M., Das, M., & Breazeal, C. (2016). Lessons From Teachers on Performing HRI Studies with Young Children in Schools. In S. Sabanovic, A. Paiva, Y. Nagai, & C. Bartneck (Eds.), Proceedings of the 11th ACM/IEEE International Conference on Human-Robot Interaction: alt.HRI (pp. 383-390). [PDF]